Scraping an Image Site with Python: BS4 plus Multithreading

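I have a friend who likes looking at pictures (photo shoots, to be precise) on an image site. Looking wasn't enough for him: he is also a compulsive collector and wanted to download every image on the site, so he asked me for help.

After [a very long time] of study, this amateur finally got it working and wrote it up as this tutorial. I majored in economics and program purely as a hobby, so please point out any shortcomings and go easy on me. Thanks.

This article has two parts. The first covers the basic approach, that is, the single-threaded workflow for scraping the images. The second adds multithreading, which makes the crawl considerably faster.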

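Preface

This crawler is built on BeautifulSoup plus the urllib/urllib2 modules, so the code is Python 2. Python has another efficient crawling framework called Scrapy, but I still haven't figured it out, so I'm not using it for now.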

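Basic workflow

Overview

The crawl needs only two inputs: a starting URL (written as URL here, so the site doesn't come after me) and a path to save the files to (written as PATH, to protect my privacy). Please keep this in mind when reading the code.

Downloading the images from this site and organizing the files takes the following steps: [if only I knew how to draw a flowchart]

1. Open the site's home page, get the total number of pages, and get the link to each album;

2. Open an album, take its title as the name of the folder to save into, and get the number of pages in the album;

3. Get the link to each image;

4. Download the image and save it locally under the image's file name on the site, in the folder from step 2.

Code and explanation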

    # -*- coding: utf-8 -*-
    """
    @author: Adam
    """
    import os
    import urllib
    import urllib2

    from bs4 import BeautifulSoup

    root = PATH  # placeholder: local folder to save into
    url = URL    # placeholder: the site's start page

    req = urllib2.Request(url)
    content = urllib2.urlopen(req).read()
    soup = BeautifulSoup(content, "lxml")
    page = soup.find_all('a')
    pagenum1 = page[-3].get_text()  # Note 1

    # Walk the index pages 1..pagenum1
    for i in range(1, int(pagenum1) + 1):
        if i == 1:
            url1 = URL
        else:
            url1 = URL + str(i) + ".html"  # Note 2
        req1 = urllib2.Request(url1)
        content1 = urllib2.urlopen(req1).read()
        soup1 = BeautifulSoup(content1, "lxml")
        table = soup1.find_all('td')
        title = soup1.find_all('div', class_='title')  # Note 3

        # Each index page lists 18 albums
        for j in range(1, 19):
            folder = title[j-1].get_text()
            folder = folder.replace('\n', '')  # Note 4
            curl = table[j].a['href']  # Note 5
            purl = URL + curl

            # Second level: the album's own page
            preq = urllib2.Request(purl)
            pcontent = urllib2.urlopen(preq).read()
            psoup = BeautifulSoup(pcontent, "lxml")
            page2 = psoup.find_all('a')
            pagenum2 = page2[-4].get_text()

            album_dir = os.path.join(root, folder)
            if not os.path.exists(album_dir):
                os.mkdir(album_dir)

            # Walk the album's pages; page t > 1 is "<album>-t.html"
            for t in range(1, int(pagenum2) + 1):
                if t == 1:
                    purl1 = purl
                else:
                    purl1 = purl[:-5] + '-' + str(t) + '.html'
                preq2 = urllib2.Request(purl1)
                pcontent2 = urllib2.urlopen(preq2).read()
                psoup2 = BeautifulSoup(pcontent2, "lxml")
                picbox = psoup2.find_all('div', class_='pic_box')  # Note 6

                # Six images per album page, numbered continuously across pages
                for k in range(1, 7):
                    filename = os.path.join(album_dir, str(k + 6*(t-1)) + ".jpg")
                    if not os.path.exists(filename):
                        try:
                            pic = picbox[k].find('img')
                            piclink = pic.get('src')  # Note 7
                            urllib.urlretrieve(piclink, filename)
                        except Exception:
                            continue

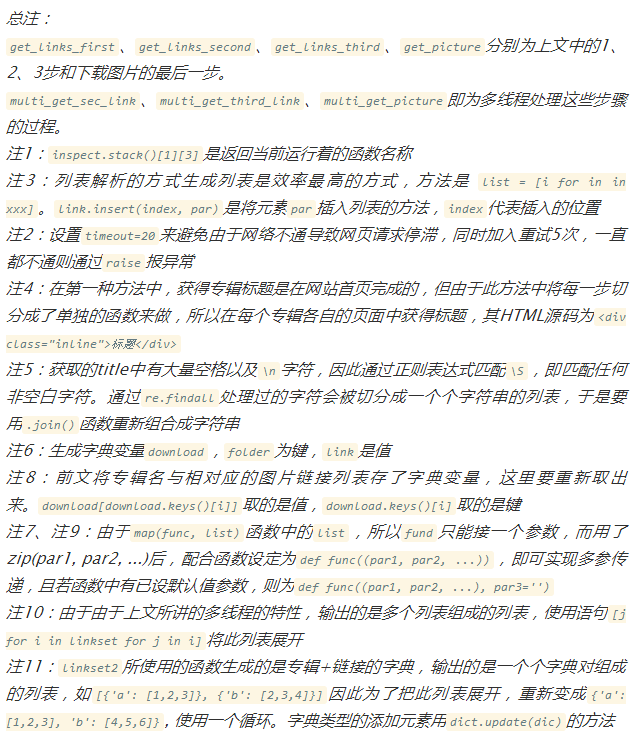

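Note 1: This is how the page count is obtained. The HTML for a page number looks like <a href="/albums/XiuRen-27.html">27</a>, so the text of the third-from-last <a> tag is taken as the total number of pages.

Note 2: I found that, after paging, the URL is simply the home page URL followed by the page number.

Note 3: The HTML for an album title is <div class="title"><span class="name">album title</span></div>

Note 4: The album title is used as the folder name. The title string needs some processing first, which is covered further below.

Note 5: The HTML that holds each album's own link is <td><a href="/photos/XiuRen-5541.html" target="_blank"></a></td>

Notes 6 and 7: The HTML that holds an image is <div class="pic_box"><img src=" " alt=" "></div>

find_all('div', class_='pic_box') locates the blocks that hold the images, find('img') then finds the image tag inside a block, and get('src') retrieves the image link.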
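To make that three-step lookup concrete without needing the real site, here is a minimal sketch that runs the same logic on a made-up HTML snippet. Note the assumptions: it is written for Python 3 and uses the standard library's html.parser as a stand-in for BeautifulSoup (so it runs with no third-party packages), and the class name PicBoxParser, the sample markup, and the example.com URLs are all hypothetical.

```python
from html.parser import HTMLParser


class PicBoxParser(HTMLParser):
    """Collect the src of every <img> found inside <div class="pic_box">."""

    def __init__(self):
        super().__init__()
        self.depth = 0   # div nesting depth inside the current pic_box (0 = outside)
        self.links = []  # image URLs in document order

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "div":
            if self.depth > 0:
                self.depth += 1  # a nested div inside a pic_box
            elif "pic_box" in (attrs.get("class") or "").split():
                self.depth = 1   # entering a pic_box block
        elif tag == "img" and self.depth > 0 and attrs.get("src"):
            self.links.append(attrs["src"])

    def handle_endtag(self, tag):
        if tag == "div" and self.depth > 0:
            self.depth -= 1


# Made-up sample markup; host and file names are placeholders.
SAMPLE = """
<div class="banner"><img src="http://example.com/logo.png" alt=""></div>
<div class="pic_box"><img src="http://example.com/a/001.jpg" alt=""></div>
<div class="pic_box"><img src="http://example.com/a/002.jpg" alt=""></div>
"""

parser = PicBoxParser()
parser.feed(SAMPLE)
image_links = parser.links
print(image_links)
```

Only the two images inside pic_box blocks are collected; the banner image is skipped, which is the same filtering the find_all/find/get chain performs.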

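With the code above, the whole process of downloading every image and saving it into the matching folder is clumsily complete. The approach is extremely inefficient: it is single-threaded, so every request waits for the previous one to finish, and it relies on four levels of nested loops. That is why I turned to multithreading to speed things up.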

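Multithreaded approach

Introduction

There are plenty of articles online about multithreading in Python, but they all seemed very complicated (read: my skills are limited). Then I found a module that does the job in just a few lines.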

    from multiprocessing.dummy import Pool as ThreadPool
    import urllib2

    url = "http://www.cnblogs.com"
    urls = [url] * 50

    pool = ThreadPool(4)  # four worker threads
    results = pool.map(urllib2.urlopen, urls)
    pool.close()
    pool.join()

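Here urls is a list. The module uses map(func, list) to feed the elements of the list, from first to last, into func. results comes back as a list, with one result per input element in the same order as the inputs.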
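The ordering behaviour is easy to check without touching the network. The sketch below is an illustration only: slow_square is a hypothetical stand-in for a slow call such as urlopen, and the sleep times are arbitrary. It runs on Python 3 (and should also run on Python 2).

```python
import time
from multiprocessing.dummy import Pool as ThreadPool


def slow_square(n):
    """Stand-in for a network call: wait briefly, then return a result."""
    time.sleep(0.01 * (5 - n))  # earlier items deliberately take longer
    return n * n


numbers = [1, 2, 3, 4]

pool = ThreadPool(4)
results = pool.map(slow_square, numbers)  # blocks until every item is done
pool.close()
pool.join()

print(results)  # [1, 4, 9, 16]
```

Even though the first item finishes last, the results list still follows the order of the inputs, so results[i] always belongs to numbers[i].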

Applying it to this case

    # -*- coding: utf-8 -*-
    """
    @author: Adam
    """
    import os
    import urllib2
    import urllib
    from bs4 import BeautifulSoup
    import re
    import inspect
    from multiprocessing.dummy import Pool as ThreadPool
    from multiprocessing import cpu_count as cpu  # pool size = number of CPUs


    def read_url(url):
        """Fetch url, retrying up to five times, and return the parsed page."""
        req = urllib2.Request(url)
        fails = 0
        while True:
            try:
                content = urllib2.urlopen(req, timeout=20).read()
                break
            except Exception:
                fails += 1
                # inspect.stack()[1][3] is the name of the function that called read_url
                print inspect.stack()[1][3] + ' hit an error'
                if fails >= 5:
                    raise  # give up after the fifth failed attempt
        soup = BeautifulSoup(content, "lxml")
        return soup


    def get_links_first(url):
        """Return the URLs of all index pages."""
        soup = read_url(url)
        page = soup.find_all('a')
        pagenum = page[-3].get_text()
        link = [url[:-5] + '-' + str(i) + '.html' for i in range(2, int(pagenum)+1)]
        link.insert(0, url)  # the first page keeps its original URL
        return link


    def get_links_second(url):
        """Return the album links found on one index page."""
        purlist = []
        soup = read_url(url)
        table = soup.find_all('td')
        for j in range(1, 19):
            try:
                curl = table[j].a['href']
                purl = URL + curl
                if purl not in purlist:
                    purlist.append(purl)
            except Exception:
                continue
        return purlist


    def get_links_third(url):
        """Return {folder name: [page URLs]} for one album."""
        download = {}
        soup = read_url(url)
        page = soup.find_all('a')
        pagenum = page[-4].get_text()
        link = [url[:-5] + '-' + str(i) + '.html' for i in range(2, int(pagenum)+1)]
        link.insert(0, url)
        title = soup.find_all('div', class_='inline')[0].get_text()  # album title
        title = re.findall(r'\S', title)  # keep only the non-whitespace characters
        folder = ''.join(title)           # the cleaned title becomes the folder name
        download[folder] = link           # folder name -> this album's page URLs
        return download


    def get_picture(args):
        """Download the images on one album page into the given folder."""
        url, folder = args  # pool.map passes a single argument, so unpack the pair
        soup = read_url(url)
        picbox = soup.find_all('div', class_='pic_box')
        for k in range(1, 7):
            try:
                pic = picbox[k].find('img')
                piclink = pic.get('src')
                filename = folder + '/' + os.path.basename(piclink)
                print folder
                if not os.path.exists(filename):
                    urllib.urlretrieve(piclink, filename)
            except Exception:
                continue


    def multi_get_sec_link(url):
        pool = ThreadPool(cpu())
        linkset = pool.map(get_links_second, url)
        pool.close()
        pool.join()
        return linkset


    def multi_get_third_link(url):
        pool = ThreadPool(4)
        linkset = pool.map(get_links_third, url)
        pool.close()
        pool.join()
        return linkset


    def multi_get_picture(download, root=PATH):
        pool = ThreadPool(4)
        for name, picurlset in download.items():
            folder = root + '/' + name  # one folder per album
            if not os.path.exists(folder):
                os.mkdir(folder)
            # pair every page URL with its album folder before handing it to the pool
            pool.map(get_picture, [(picurl, folder) for picurl in picurlset])
        pool.close()
        pool.join()


    if __name__ == '__main__':
        url = URL
        linkset = multi_get_sec_link(get_links_first(url))
        linkset = [j for i in linkset for j in i]  # flatten the list of lists
        linkset2 = multi_get_third_link(linkset)
        finalset = {}
        for dic in linkset2:
            finalset.update(dic)  # merge the per-album dicts into one
        multi_get_picture(finalset)
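The data handling between the stages (building page URLs, cleaning the title, flattening, merging, and pairing each page with its folder) can be tried out offline. The following is a rough sketch rather than the program itself: the helper names build_page_urls, clean_title, flatten, merge and make_tasks are hypothetical, the example.com URLs and the /tmp/photos path are invented, and the URL pattern is assumed to be the "-n.html" scheme used in the code above.

```python
import re


def build_page_urls(first_url, page_count):
    """Page 1 keeps first_url; page n inserts '-n' before the '.html' suffix."""
    stem = first_url[:-5]  # strip the trailing '.html'
    return [first_url] + [stem + "-" + str(n) + ".html"
                          for n in range(2, page_count + 1)]


def clean_title(raw_title):
    """Remove all whitespace so the title is usable as a folder name."""
    return "".join(re.findall(r"\S", raw_title))


def flatten(list_of_lists):
    """Turn [[a, b], [c]] into [a, b, c]."""
    return [item for sub in list_of_lists for item in sub]


def merge(dicts):
    """Combine several {folder: pages} dicts into one."""
    merged = {}
    for d in dicts:
        merged.update(d)
    return merged


def make_tasks(download, root):
    """Pair every page URL with the folder its images belong in."""
    tasks = []
    for name, page_urls in download.items():
        folder = root + "/" + name
        tasks.extend((page_url, folder) for page_url in page_urls)
    return tasks


# Hypothetical values standing in for what the crawl would return.
pages = build_page_urls("http://example.com/photos/demo-1.html", 3)
per_album = [{clean_title(" Demo \n Album "): pages},
             {"Other": ["http://example.com/photos/demo-2.html"]}]
finalset = merge(per_album)
tasks = make_tasks(finalset, "/tmp/photos")

print(flatten([["a", "b"], ["c"]]))  # ['a', 'b', 'c']
print(sorted(finalset))              # ['DemoAlbum', 'Other']
print(len(tasks))                    # 4
```

One detail worth noticing when building the task list: the folder has to be repeated for every URL. Zipping the URL list directly with the folder string would pair each URL with a single character of the path, because zip iterates over a string one character at a time.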

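Addendum

My network environment is truly bizarre: web pages open fine and video streams smoothly, yet urlopen keeps timing out. So I added the following code, which saves the final dictionary of links to a file.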

    f = open(FILENAME, "w")  # open for writing
    f.write(str(finalset))
    f.close()
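As an alternative to writing the dictionary out as a string, the standard json module can store and restore it without having to evaluate the file's contents as Python code. This is only a sketch under assumptions: the dictionary contents, the links.json file name, and the temporary directory are made up for the example, and it presumes the dictionary holds nothing but strings and lists of strings.

```python
import json
import os
import tempfile

# Hypothetical link dictionary: folder name -> list of album page URLs.
finalset = {
    "DemoAlbum": [
        "http://example.com/photos/demo-1.html",
        "http://example.com/photos/demo-1-2.html",
    ]
}

# A throwaway location for the example; use a real path in practice.
links_file = os.path.join(tempfile.mkdtemp(), "links.json")

with open(links_file, "w") as f:
    json.dump(finalset, f)   # write the dict as JSON text

with open(links_file, "r") as f:
    restored = json.load(f)  # read it back as a dict

print(restored == finalset)  # True
```

The with blocks close the file automatically, even if writing or reading fails partway through.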

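After that, the program reads the file directly. And so that it keeps retrying by itself through the countless painful timeout errors, I added one more loop.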

    f = open("/Users/Adam/Documents/Python_Scripts/Photos/links.txt", "r")
    finalset = eval(f.read())  # turn the stringified dict back into a dict
    f.close()

    while True:
        try:
            multi_get_picture(finalset)
            break
        except Exception:
            continue
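A retry loop with no upper bound will spin forever if the site is really down, so a capped variant may be safer. Below is a minimal sketch of that idea. The retry helper, its attempts and delay parameters, and the flaky_download function that fails twice before succeeding are all hypothetical and exist only to demonstrate the pattern.

```python
import time


def retry(task, attempts=5, delay=0.0):
    """Call task() until it succeeds or attempts run out; re-raise the last error."""
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception as exc:
            print("attempt %d failed: %s" % (attempt, exc))
            if attempt == attempts:
                raise  # out of attempts: let the caller see the error
            time.sleep(delay)  # optional pause before trying again


calls = {"count": 0}


def flaky_download():
    """Pretend download that times out twice, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise IOError("timed out")
    return "done"


result = retry(flaky_download)
print(result)  # done
```

In the crawler, something like retry(lambda: multi_get_picture(finalset)) would play the role of the loop, with attempts chosen to suit how flaky the connection is.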

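And with that, the program is finished. All that's left is to run it and watch the folders pop up on the computer one by one, with the images springing up inside them like bamboo shoots after rain.

That's all.

Finally, readers are welcome to adapt my code for their own use. If you repost this article, please credit the source. (Though I know nobody will repost it.)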
