Python implementation of linear regression: Elastic Net regression


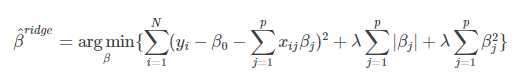

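Elastic net regression combines Lasso regression and ridge regression. Its cost function is:

$$\hat{\beta} = \arg\min_{\beta}\left(\|y - X\beta\|_2^2 + \lambda_2\|\beta\|_2^2 + \lambda_1\|\beta\|_1\right)$$

If we let $\lambda = \lambda_1 + \lambda_2$ and $\alpha = \dfrac{\lambda_1}{\lambda_1 + \lambda_2}$, then

$$\hat{\beta} = \arg\min_{\beta}\left(\|y - X\beta\|_2^2 + \lambda\left[\alpha\|\beta\|_1 + (1 - \alpha)\|\beta\|_2^2\right]\right)$$

It follows that the elastic net penalty $\alpha\|\beta\|_1 + (1 - \alpha)\|\beta\|_2^2$ is exactly a convex linear combination of the ridge penalty and the Lasso penalty. When $\alpha = 0$, elastic net regression reduces to ridge regression; when $\alpha = 1$, it reduces to Lasso regression. Elastic net regression therefore combines the strengths of both: it performs variable selection, like Lasso, and it has a good grouping effect, meaning strongly correlated predictors tend to receive similar coefficients.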
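A quick NumPy check of the convex-combination claim, using the convention stated above (α = 0 gives ridge, α = 1 gives Lasso). The function name `elastic_net_penalty` and the sample values are hypothetical. The `l1_l2_regularization` class later in this article plays the same role with `l1_ratio` as α, but it also scales its L2 term by 0.5.

```python
import numpy as np


def elastic_net_penalty(beta, lam, alpha):
    """lam * (alpha * ||beta||_1 + (1 - alpha) * ||beta||_2^2)."""
    l1 = np.sum(np.abs(beta))  # Lasso part
    l2 = np.sum(beta ** 2)     # ridge part
    return lam * (alpha * l1 + (1 - alpha) * l2)


beta = np.array([0.5, -2.0, 0.0, 1.0])  # hypothetical coefficients
lam = 0.1

ridge = lam * np.sum(beta ** 2)
lasso = lam * np.sum(np.abs(beta))

print(elastic_net_penalty(beta, lam, 0.0), ridge)  # alpha = 0: same as ridge
print(elastic_net_penalty(beta, lam, 1.0), lasso)  # alpha = 1: same as Lasso
print(elastic_net_penalty(beta, lam, 0.5))         # halfway between the two
```

Because the penalty is linear in α, any intermediate α gives a value lying between the pure ridge and pure Lasso penalties.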

The explanation above is taken from: https://blog.csdn.net/weixin_41500849/article/details/80447501

Next comes the implementation. The code is from https://github.com/eriklindernoren/ML-From-Scratch

As before, we first define a base class that every linear regression variant inherits from:

```python
import math

import numpy as np


class Regression(object):
    """ Base regression model. Models the relationship between a scalar dependent variable y and the independent
    variables X.
    Parameters:
    -----------
    n_iterations: int
        The number of training iterations the algorithm will tune the weights for.
    learning_rate: float
        The step length that will be used when updating the weights.
    """
    def __init__(self, n_iterations, learning_rate):
        self.n_iterations = n_iterations
        self.learning_rate = learning_rate

    def initialize_weights(self, n_features):
        """ Initialize weights randomly in [-1/sqrt(N), 1/sqrt(N)] """
        limit = 1 / math.sqrt(n_features)
        self.w = np.random.uniform(-limit, limit, (n_features, ))

    def fit(self, X, y):
        # Insert constant ones for bias weights
        X = np.insert(X, 0, 1, axis=1)
        self.training_errors = []
        self.initialize_weights(n_features=X.shape[1])

        # Do gradient descent for n_iterations
        for i in range(self.n_iterations):
            y_pred = X.dot(self.w)
            # Calculate l2 loss (subclasses must define self.regularization)
            mse = np.mean(0.5 * (y - y_pred)**2 + self.regularization(self.w))
            self.training_errors.append(mse)
            # Gradient of l2 loss w.r.t w
            grad_w = -(y - y_pred).dot(X) + self.regularization.grad(self.w)
            # Update the weights
            self.w -= self.learning_rate * grad_w

    def predict(self, X):
        # Insert constant ones for bias weights
        X = np.insert(X, 0, 1, axis=1)
        y_pred = X.dot(self.w)
        return y_pred
```
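One detail of `fit` worth checking: the update uses `-(y - y_pred).dot(X)`, which is the gradient of the *summed* loss 0.5·Σ(y − ŷ)², while the value recorded in `training_errors` is a mean. The sketch below, on hypothetical random data and without the regularization term, confirms the summed-loss interpretation with central finite differences. In practice the missing 1/n factor is absorbed into `learning_rate`.

```python
import numpy as np

rng = np.random.default_rng(0)
X = np.insert(rng.normal(size=(20, 3)), 0, 1, axis=1)  # bias column, as in fit()
y = rng.normal(size=20)
w = rng.normal(size=4)


def summed_loss(w):
    # 0.5 * sum of squared residuals (no regularization term)
    return 0.5 * np.sum((y - X.dot(w)) ** 2)


# Analytic gradient as written in Regression.fit (data term only)
grad_analytic = -(y - X.dot(w)).dot(X)

# Central finite differences, one coordinate at a time
eps = 1e-6
grad_numeric = np.array([
    (summed_loss(w + eps * e) - summed_loss(w - eps * e)) / (2 * eps)
    for e in np.eye(len(w))
])

print(np.allclose(grad_analytic, grad_numeric, atol=1e-4))  # -> True
```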

Next comes the core of elastic net regression, the combined L1/L2 regularization term:

```python
import numpy as np


class l1_l2_regularization():
    """ Regularization for Elastic Net Regression """
    def __init__(self, alpha, l1_ratio=0.5):
        self.alpha = alpha
        self.l1_ratio = l1_ratio

    def __call__(self, w):
        # L1 norm (sum of |w_i|); np.linalg.norm(w) without ord would give the L2 norm
        l1_contr = self.l1_ratio * np.linalg.norm(w, 1)
        l2_contr = (1 - self.l1_ratio) * 0.5 * w.T.dot(w)
        return self.alpha * (l1_contr + l2_contr)

    def grad(self, w):
        # Subgradient of the L1 term (np.sign gives 0 at w_i = 0) plus gradient of the L2 term
        l1_contr = self.l1_ratio * np.sign(w)
        l2_contr = (1 - self.l1_ratio) * w
        return self.alpha * (l1_contr + l2_contr)
```
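The L1 contribution must use the L1 norm. For a 1-D array, `np.linalg.norm(w)` with no `ord` argument returns the Euclidean (L2) norm, which would not match the `np.sign(w)` used in `grad`. The sketch below compares both choices against a finite-difference gradient; `penalty`, `penalty_grad`, `numeric_grad` and the values of `alpha` and `l1_ratio` are hypothetical names and settings for illustration.

```python
import numpy as np

w = np.array([3.0, -4.0])
print(np.linalg.norm(w))     # default for a vector: Euclidean (L2) norm -> 5.0
print(np.linalg.norm(w, 1))  # ord=1: sum of absolute values (L1) -> 7.0

alpha, l1_ratio = 0.1, 0.7


def penalty(w, l1_ord):
    # l1_ord=1 is the true L1 norm; l1_ord=None is the default (L2 for vectors)
    l1 = np.linalg.norm(w, l1_ord)
    return alpha * (l1_ratio * l1 + (1 - l1_ratio) * 0.5 * w.dot(w))


def penalty_grad(w):
    # Same expression as l1_l2_regularization.grad
    return alpha * (l1_ratio * np.sign(w) + (1 - l1_ratio) * w)


def numeric_grad(f, w, eps=1e-6):
    # Central finite differences, valid away from w_i = 0
    return np.array([(f(w + eps * e) - f(w - eps * e)) / (2 * eps) for e in np.eye(len(w))])


ok_l1 = np.allclose(numeric_grad(lambda v: penalty(v, 1), w), penalty_grad(w))
ok_default = np.allclose(numeric_grad(lambda v: penalty(v, None), w), penalty_grad(w))
print(ok_l1, ok_default)  # -> True False
```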

Then the elastic net regression model itself:

```python
from mlfromscratch.utils import normalize, polynomial_features

# Regression and l1_l2_regularization are the classes defined above


class ElasticNet(Regression):
    """ Regression where a combination of l1 and l2 regularization are used. The
    ratio of their contributions are set with the 'l1_ratio' parameter.
    Parameters:
    -----------
    degree: int
        The degree of the polynomial that the independent variable X will be transformed to.
    reg_factor: float
        The factor that will determine the amount of regularization and feature
        shrinkage.
    l1_ratio: float
        Weighs the contribution of l1 and l2 regularization.
    n_iterations: int
        The number of training iterations the algorithm will tune the weights for.
    learning_rate: float
        The step length that will be used when updating the weights.
    """
    def __init__(self, degree=1, reg_factor=0.05, l1_ratio=0.5, n_iterations=3000,
                 learning_rate=0.01):
        self.degree = degree
        self.regularization = l1_l2_regularization(alpha=reg_factor, l1_ratio=l1_ratio)
        super(ElasticNet, self).__init__(n_iterations, learning_rate)

    def fit(self, X, y):
        # Expand X into polynomial features and normalize before fitting
        X = normalize(polynomial_features(X, degree=self.degree))
        super(ElasticNet, self).fit(X, y)

    def predict(self, X):
        # Apply the same feature transformation used in fit
        X = normalize(polynomial_features(X, degree=self.degree))
        return super(ElasticNet, self).predict(X)
```
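`ElasticNet` trains with plain (sub)gradient descent, using `np.sign(w)` for the L1 term; with a fixed step size that update tends to oscillate around zero rather than land exactly on it. A commonly used alternative, swapped in here and not part of ML-From-Scratch, is proximal gradient descent (ISTA): take a gradient step on the smooth part, then soft-threshold. The sketch is self-contained NumPy; `elastic_net_ista`, `soft_threshold` and the synthetic data are hypothetical, and the squared error is scaled by 1/n.

```python
import numpy as np


def soft_threshold(z, t):
    # Proximal operator of t * ||.||_1
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)


def elastic_net_ista(X, y, alpha=0.1, l1_ratio=0.7, n_iterations=2000):
    """Minimize (1/2n)||y - Xw||^2 + alpha*(l1_ratio*||w||_1 + (1-l1_ratio)/2*||w||^2)."""
    n, d = X.shape
    w = np.zeros(d)
    # Step 1/L, with L the Lipschitz constant of the smooth part's gradient
    L = np.linalg.norm(X, 2) ** 2 / n + alpha * (1 - l1_ratio)
    step = 1.0 / L
    for _ in range(n_iterations):
        # Gradient of the smooth part: squared error plus L2 penalty
        grad = -X.T.dot(y - X.dot(w)) / n + alpha * (1 - l1_ratio) * w
        # Proximal step for the L1 penalty
        w = soft_threshold(w - step * grad, step * alpha * l1_ratio)
    return w


rng = np.random.default_rng(42)
X = rng.normal(size=(200, 6))
true_w = np.array([3.0, -2.0, 0.0, 0.0, 1.5, 0.0])  # three irrelevant features
y = X.dot(true_w) + 0.1 * rng.normal(size=200)

w_hat = elastic_net_ista(X, y)
print(np.round(w_hat, 2))
```

The irrelevant coefficients come out exactly zero, which is the variable-selection behavior described earlier; the relevant ones are shrunk slightly toward zero.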

Some of the helper functions used here (such as `normalize` and `polynomial_features`) are described at: https://www.cnblogs.com/xiximayou/p/12802868.html
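To follow the pipeline without installing `mlfromscratch`, the sketch below reimplements what `polynomial_features` and `normalize` are assumed to do here: build every monomial of the inputs up to `degree` (including a constant column), and rescale each row to unit L2 norm. These are illustrative stand-ins, not the package's code; check the linked article for the real versions.

```python
from itertools import combinations_with_replacement

import numpy as np


def polynomial_features(X, degree):
    """All monomials of the input columns up to `degree`, including the constant 1."""
    n_samples, n_features = X.shape
    combos = [c for d in range(degree + 1)
              for c in combinations_with_replacement(range(n_features), d)]
    X_new = np.empty((n_samples, len(combos)))
    for i, idx in enumerate(combos):
        # Product over an empty column selection is 1, giving the constant column
        X_new[:, i] = np.prod(X[:, list(idx)], axis=1)
    return X_new


def normalize(X, axis=-1, order=2):
    """Scale each row (by default) to unit norm; all-zero rows are left unchanged."""
    norms = np.atleast_1d(np.linalg.norm(X, order, axis))
    norms[norms == 0] = 1
    return X / np.expand_dims(norms, axis)


X = np.array([[2.0], [3.0]])
print(polynomial_features(X, 3))             # columns: 1, x, x^2, x^3
print(normalize(polynomial_features(X, 3)))  # each row scaled to unit length
```

In this sketch `normalize` works per sample (row), not per feature column, so for the single time feature used below each row [1, t, t², ...] is scaled to unit length.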

Finally, the main function that runs everything:

```python
from __future__ import print_function
import sys

import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

# Path to a local copy of ML-From-Scratch (here: Google Drive in Colab)
sys.path.append("/content/drive/My Drive/learn/ML-From-Scratch/")

# Import helper functions
from mlfromscratch.supervised_learning import ElasticNet
from mlfromscratch.utils import train_test_split, mean_squared_error


def main():
    # Load temperature data
    data = pd.read_csv('mlfromscratch/data/TempLinkoping2016.txt', sep="\t")

    time = np.atleast_2d(data["time"].values).T
    temp = data["temp"].values

    X = time  # fraction of the year [0, 1]
    y = temp

    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4)

    poly_degree = 15
    reg_factor = 0.01
    model = ElasticNet(degree=poly_degree,
                       reg_factor=reg_factor,
                       l1_ratio=0.7,
                       learning_rate=0.001,
                       n_iterations=4000)
    model.fit(X_train, y_train)

    # Training error plot
    n = len(model.training_errors)
    training, = plt.plot(range(n), model.training_errors, label="Training Error")
    plt.legend(handles=[training])
    plt.title("Error Plot")
    plt.ylabel('Mean Squared Error')
    plt.xlabel('Iterations')
    plt.savefig("test1.png")
    plt.show()

    y_pred = model.predict(X_test)
    mse = mean_squared_error(y_test, y_pred)
    print("Mean squared error: %s (given by reg. factor: %s)" % (mse, reg_factor))

    y_pred_line = model.predict(X)

    # Color map
    cmap = plt.get_cmap('viridis')

    # Plot the results
    m1 = plt.scatter(366 * X_train, y_train, color=cmap(0.9), s=10)
    m2 = plt.scatter(366 * X_test, y_test, color=cmap(0.5), s=10)
    plt.plot(366 * X, y_pred_line, color='black', linewidth=2, label="Prediction")
    plt.suptitle("Elastic Net")
    plt.title("MSE: %.2f" % mse, fontsize=10)
    plt.xlabel('Day')
    plt.ylabel('Temperature in Celsius')
    plt.legend((m1, m2), ("Training data", "Test data"), loc='lower right')
    plt.savefig("test2.png")
    plt.show()


if __name__ == "__main__":
    main()
```

Result:

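Mean squared error: 11.232800207362782 (given by reg. factor: 0.05)

The `0.05` in this output is a value hard-coded in the print label; the model that produced it was trained with `reg_factor=0.01`.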


Original article: https://www.cnblogs.com/xiximayou/p/12808904.html
