Wu Yuxiong (吴裕雄): Python Machine Learning, DMT (1)

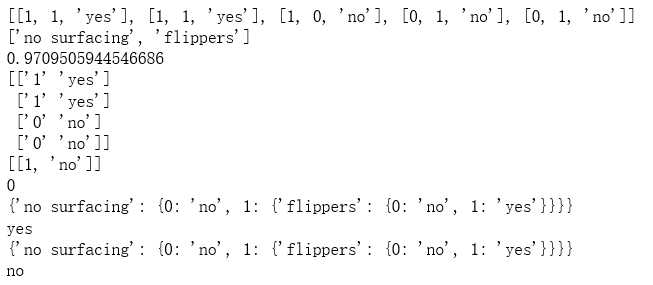

import operator as op
import pickle
from math import log

import numpy as np


def createDataSet():
    # Toy data set: two binary features followed by the class label.
    dataSet = [[1, 1, 'yes'],
               [1, 1, 'yes'],
               [1, 0, 'no'],
               [0, 1, 'no'],
               [0, 1, 'no']]
    labels = ['no surfacing', 'flippers']
    return dataSet, labels


dataSet, labels = createDataSet()
print(dataSet)
print(labels)


def calcShannonEnt(dataSet):
    # Count how often each class label (last column) occurs.
    labelCounts = {}
    for featVec in dataSet:
        currentLabel = featVec[-1]
        if currentLabel not in labelCounts:
            labelCounts[currentLabel] = 0
        labelCounts[currentLabel] += 1
    # Shannon entropy in bits: -sum(p * log2(p)).
    shannonEnt = 0.0
    rowNum = len(dataSet)
    for key in labelCounts:
        prob = float(labelCounts[key]) / rowNum
        shannonEnt -= prob * log(prob, 2)
    return shannonEnt


shannonEnt = calcShannonEnt(dataSet)
print(shannonEnt)


def splitDataSet(dataSet, axis, value):
    # Keep the rows whose feature `axis` equals `value`, dropping that column.
    retDataSet = []
    for featVec in dataSet:
        if featVec[axis] == value:
            reducedFeatVec = featVec[:axis]
            reducedFeatVec.extend(featVec[axis + 1:])
            retDataSet.append(reducedFeatVec)
    return retDataSet


retDataSet = splitDataSet(dataSet, 1, 1)
print(np.array(retDataSet))
retDataSet = splitDataSet(dataSet, 1, 0)
print(retDataSet)


def chooseBestFeatureToSplit(dataSet):
    # The last column is the class label, so it is not a candidate feature.
    numFeatures = len(dataSet[0]) - 1
    baseEntropy = calcShannonEnt(dataSet)
    bestInfoGain = 0.0
    bestFeature = -1
    for i in range(numFeatures):
        featList = [example[i] for example in dataSet]
        uniqueVals = set(featList)
        # Weighted entropy of the subsets produced by splitting on feature i.
        newEntropy = 0.0
        for value in uniqueVals:
            subDataSet = splitDataSet(dataSet, i, value)
            prob = len(subDataSet) / float(len(dataSet))
            newEntropy += prob * calcShannonEnt(subDataSet)
        infoGain = baseEntropy - newEntropy
        if infoGain > bestInfoGain:
            bestInfoGain = infoGain
            bestFeature = i
    return bestFeature


bestFeature = chooseBestFeatureToSplit(dataSet)
print(bestFeature)


def majorityCnt(classList):
    # Return the most frequent class label.
    classCount = {}
    for vote in classList:
        if vote not in classCount:
            classCount[vote] = 0
        classCount[vote] += 1
    sortedClassCount = sorted(classCount.items(), key=op.itemgetter(1), reverse=True)
    return sortedClassCount[0][0]


def createTree(dataSet, labels):
    classList = [example[-1] for example in dataSet]
    # Stop when every remaining row has the same class.
    if classList.count(classList[0]) == len(classList):
        return classList[0]
    # Stop when no features are left; fall back to a majority vote.
    if len(dataSet[0]) == 1:
        return majorityCnt(classList)
    bestFeat = chooseBestFeatureToSplit(dataSet)
    bestFeatLabel = labels[bestFeat]
    myTree = {bestFeatLabel: {}}
    # Build the reduced label list as a copy so the caller's list is not modified
    # (the original used del(labels[bestFeat]), which mutated the argument).
    subLabels = labels[:bestFeat] + labels[bestFeat + 1:]
    featValues = [example[bestFeat] for example in dataSet]
    uniqueVals = set(featValues)
    for value in uniqueVals:
        myTree[bestFeatLabel][value] = createTree(
            splitDataSet(dataSet, bestFeat, value), subLabels[:])
    return myTree


myTree = createTree(dataSet, labels)
print(myTree)


def classify(inputTree, featLabels, testVec):
    # The single top-level key names the feature tested at this node.
    firstStr = next(iter(inputTree))
    secondDict = inputTree[firstStr]
    featIndex = featLabels.index(firstStr)
    key = testVec[featIndex]
    valueOfFeat = secondDict[key]
    if isinstance(valueOfFeat, dict):
        # Internal node: keep descending.
        classLabel = classify(valueOfFeat, featLabels, testVec)
    else:
        # Leaf: the stored value is the class label.
        classLabel = valueOfFeat
    return classLabel


featLabels = ['no surfacing', 'flippers']
classLabel = classify(myTree, featLabels, [1, 1])
print(classLabel)


def storeTree(inputTree, filename):
    # Serialize the tree to disk; `with` closes the file even on error.
    with open(filename, 'wb') as fw:
        pickle.dump(inputTree, fw)


def grabTree(filename):
    # Load a tree previously written by storeTree.
    with open(filename, 'rb') as fr:
        return pickle.load(fr)


filename = "D:\\mytree.txt"
storeTree(myTree, filename)
mySecTree = grabTree(filename)
print(mySecTree)
featLabels = ['no surfacing', 'flippers']
classLabel = classify(mySecTree, featLabels, [0, 0])
print(classLabel)
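
As a sanity check on the listing above, the following minimal sketch recomputes the base entropy of the five-row sample data and the information gain of each feature. It is self-contained and does not reuse the functions from the post; the helper names `entropy` and `info_gain` are hypothetical and chosen only for this illustration.

```python
from collections import Counter
from math import log2

# Same toy data as in the listing: two binary features plus a class label.
rows = [[1, 1, 'yes'], [1, 1, 'yes'], [1, 0, 'no'], [0, 1, 'no'], [0, 1, 'no']]


def entropy(rows):
    """Shannon entropy (in bits) of the class labels in the last column."""
    counts = Counter(row[-1] for row in rows)
    total = len(rows)
    return -sum(c / total * log2(c / total) for c in counts.values())


def info_gain(rows, axis):
    """Entropy reduction obtained by splitting on the feature at index `axis`."""
    total = len(rows)
    remainder = 0.0
    for value in {row[axis] for row in rows}:
        subset = [row for row in rows if row[axis] == value]
        remainder += len(subset) / total * entropy(subset)
    return entropy(rows) - remainder


base = entropy(rows)
gains = [info_gain(rows, axis) for axis in range(2)]
print(round(base, 4))                # 0.971
print([round(g, 4) for g in gains])  # [0.42, 0.171]
```

Feature 0 (`no surfacing`) has the larger gain, which is consistent with `chooseBestFeatureToSplit` picking index 0 and with `no surfacing` ending up at the root of the tree.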
